How many times per week should a muscle be trained to maximize muscle hypertrophy? New meta-analysis review

Training frequency is a controversial topic. Several studies find benefits of training a muscle more times per week, yet many others find it doesn’t matter. Which studies are right? One way to answer this question is to conduct a meta-analysis, which gives a sort of ‘weighted average’ of the whole literature. Two of my esteemed friends and colleagues, Brad Schoenfeld and James Krieger, teamed up with Jozo Grgic to do just this. They published their findings in a new study titled “How many times per week should a muscle be trained to maximize muscle hypertrophy? A systematic review and meta-analysis of studies examining the effects of resistance training frequency”.

The main finding of the analysis was that training frequency did not influence muscle growth in studies where total work was equated between groups, but in studies where total work was not equated, higher frequencies led to more muscle growth. This is in line with many scientists’ current thinking on the topic. As per my lecture at the 2017 HPC Conference (now free to the public), mechanical tension is the primary driver of muscle growth. For greater muscle growth, a muscle needs to be subjected either to higher levels of tension or to a given tension for a longer duration. A higher training volume, in this case typically meaning more reps per set, normally achieves greater time under tension and can thereby increase muscle growth, but it’s not a (strong) independent driver of growth.

Practical application

Based on this theory and meta-analysis, given a certain work volume, how often you train a muscle group per week doesn’t matter much. However, this does not answer the review’s primary research question: “How many times per week should a muscle be trained to maximize muscle hypertrophy?”

If you train a muscle group more often, you increase total work output. Many people, including scientists and not just the authors of this review, consistently overlook this crucial fact. If it doesn’t click, consider a typical bro leg day performed once a week on Wednesday: 3 sets each, for as many reps as possible, of squats, deadlifts, leg presses and leg extensions. Now move the squats and leg presses to Monday and the deadlifts and leg extensions to Friday. You’re obviously going to achieve a higher total work output (weight x reps x sets) with the split workout than with the all-in-one workout, because you’re performing 3 of the 4 exercises in a much less fatigued condition. How many reps of leg presses at, say, 70% of 1RM can you do after squats and deadlifts? You’re gassed. I sometimes see clients double their repetition performance, such as going from 5 to 10 reps, when they move an exercise to its own day instead of performing it after several other exercises for the same muscle groups.
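To make the arithmetic concrete, here is a minimal Python sketch of total work for the leg press example. It assumes a hypothetical 150 kg working weight and the 5-to-10-rep jump described above; the function name and all numbers are illustrative, not data from any study.

```python
# Hypothetical illustration: total work (volume load) = weight x reps x sets.
# The load and rep counts are made-up numbers, not data from any study.

def total_work(weight_kg: float, reps: int, sets: int) -> float:
    """Volume load for one exercise, assuming every set uses the same weight and reps."""
    return weight_kg * reps * sets

# Leg press at an assumed 150 kg working weight for 3 sets.
fatigued = total_work(150, reps=5, sets=3)  # performed after squats and deadlifts
fresh = total_work(150, reps=10, sets=3)    # performed early in its own session

print(f"Fatigued: {fatigued:.0f} kg, fresh: {fresh:.0f} kg")
print(f"Work increase: {fresh / fatigued - 1:.0%}")
```

Doubling the reps at the same load and set count doubles the total work for that exercise, even though the set volume is identical.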

Equating total work between a higher and a lower training frequency at a given set volume effectively requires that the higher-frequency group trains with less effort. In spite of this, many studies claim both that their participants trained to failure and that total work was equated. This is basically impossible. For example, Schoenfeld et al. (2015), which found significantly greater muscle growth when training a muscle 3x per week than 2x, noted: “Over the course of each training week, all subjects performed the same exercises and repetition volume throughout the duration of the study.” This would mean the higher-frequency group was prevented from performing more reps than the lower-frequency group. However, the authors then contradict this with: “Sets were carried out to the point of momentary concentric muscular failure—the inability to perform another concentric repetition while maintaining proper form.” You can’t guarantee the same repetition performance if you have people train to failure. I think they did indeed try to train to failure and the note that they equated reps was an oversight: they meant the participants stayed in the same repetition range, like 6-12 reps, which reflects training intensity (%1RM) more than total volume (work or sets).

However, volume load was actually measured (it was a solid study), and as it turned out, training loads did not differ significantly between groups. This was probably why the authors felt they could state it was work-equated. However, statistical power, the ability to detect differences in the data as ‘statistically significant’, was a big concern here. I calculated the percentage difference in volume load between the groups, and only the forearm flexors and extensors had comparable workloads. The higher-frequency group achieved 15% more work for the chest, 9% more for the back, 22% more for the anterior thighs, 7% more for the posterior thighs and 5% more for the shoulders.
Those differences may not be statistically significant, but they may be practically significant. (More on this later.)
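The percentage differences above are simple ratios of the two groups’ volume loads. A minimal sketch follows; the function name is hypothetical and the loads are invented only to reproduce a 15% gap like the chest comparison, so they are not the study’s raw numbers.

```python
def percent_more_work(high_freq_load: float, low_freq_load: float) -> float:
    """Percentage by which the higher-frequency group's volume load exceeds the lower-frequency group's."""
    return (high_freq_load / low_freq_load - 1) * 100

# Hypothetical weekly chest volume loads (kg); not the values reported in the study.
chest_high, chest_low = 11_500, 10_000
print(f"{percent_more_work(chest_high, chest_low):.0f}% more chest work")
```

A 15% gap like this can easily fail to reach statistical significance in a small sample, which is exactly why the raw percentage is worth looking at.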

Researchers in general should definitely be stricter with their definitions of training volume and muscle failure to prevent these discrepancies.

Knowing that higher frequencies inherently increase training volume (work) and that in non-work-equated conditions higher training frequencies lead to more muscle growth, the practical conclusion seems to me to be that it’s a good idea to train your muscles more often. However, in this review, all non-work-equated studies were analyzed together, meaning that in some studies people did entire extra training days, such as performing a given full-body workout twice per week instead of once, which basically doubles the total volume in terms of not just work but also sets. As such, this part of the analysis mixes the effects of set volume and work volume and cannot tell us if it’s truly beneficial to spread out your sets across the week to achieve more work, which is the practical question for lifters.

To muddy the waters further, the meta-analysis defined volume not as work but as sets x reps. This ignores the fact that one group could progress faster in load than the other. Both groups would still achieve the same sets x reps, but one group may achieve a higher total work volume (sets x reps x weight). This also makes it more likely that one group, generally the higher-frequency group, was not training with the same level of effort, as its members may have been content to hit their rep target for the day with a lower weight than they could have handled. For example, Zaroni et al. (2018) was classified in the meta-analysis as a volume-equated study, but it was in fact not repetition-equated. The authors were careful to avoid saying this and correctly referred to the programs as having the same “target repetition range”. This distinction matters, because as it turned out, 5 days of training performed as full-body sessions instead of as a split program led to significantly greater total work: 22% to be precise. And training a muscle 5x per week also led to significantly greater muscle growth than training a muscle twice per week.
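The gap between the two definitions of volume can be shown in a few lines. This is a minimal sketch with a hypothetical `Week` class and invented numbers: both groups complete identical sets x reps, but one has progressed to a heavier load.

```python
from dataclasses import dataclass

@dataclass
class Week:
    """One group's weekly training for a muscle (hypothetical illustration)."""
    sets: int
    reps_per_set: int
    weight_kg: float

    def rep_volume(self) -> int:
        """Volume as sets x reps, the definition used in the meta-analysis."""
        return self.sets * self.reps_per_set

    def work_volume(self) -> float:
        """Volume as total work: sets x reps x weight."""
        return self.sets * self.reps_per_set * self.weight_kg

# Hypothetical: both groups do 10 sets of 8 reps, but one progressed to a heavier load.
low_freq = Week(sets=10, reps_per_set=8, weight_kg=80.0)
high_freq = Week(sets=10, reps_per_set=8, weight_kg=90.0)

print(low_freq.rep_volume() == high_freq.rep_volume())   # "volume-equated" by sets x reps
print(high_freq.work_volume() / low_freq.work_volume())  # ratio of total work
```

Under the sets x reps definition these two groups look identical, yet the heavier-training group performs 12.5% more total work.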

As such, this meta-analysis cannot answer the practical question in its title: how often should you train a muscle for maximum results? The relevant comparison in practice is set- but not work- or repetition-equated studies. This is what Greg Nuckols did in his recent unofficial meta-analysis on training frequency, which found significantly greater muscle growth with higher training frequencies.

Since Greg’s analysis wasn’t published, I think a new analysis is in order. In addition to the difference in definition of training volume, I’d like to see the inclusion criteria changed to exclude confounded studies.

Inclusion criteria

Specifically, these 2 studies should not be included in the analysis.

- Gentil et al. (2018): As the authors happily noted, “Interestingly, all participants of the present study have been training at higher frequencies (exactly the same protocol performed by G1) for at least four months before the study.” G1 is an error here and should say G2. More importantly, the authors may find this interesting, but combined with the fact that the higher-frequency group actually lost strength and gained no muscle size whatsoever, it’s clear that the participants had completely plateaued on the program. Having one group continue on its current program defeats the purpose of a randomized controlled experiment, which aims to isolate the effect of the exercise intervention and eliminate confounding factors.

If we’re going to include crappy studies, we should also include good but unpublished studies like the Norwegian Frequency Project, in which training a muscle 6x led to more muscle growth than 3x in the Norwegian national powerlifting team, and Heke (2010), in which the 3x weekly full-body group experienced a 0.8% increase in fat-free mass and a 3.8% decrease in body fat percentage, compared to only a 0.4% increase in FFM and a 2.2% decrease in BF% in the bro split group. The differences weren’t statistically significant, but that was probably mainly because these guys were already benching over 4 plates and the study lasted only 4 weeks.

- Tavares et al. (2017): This was a detraining study. The participants were only ‘strength trained’ because they had performed an 8-week training phase beforehand and the intervention actually consisted of the detraining phase. The study basically looked at whether you could maintain newbie gains with just one workout per week with 4 sets or whether it was better to have 2 workouts with 2 sets each. Muscle size non-significantly decreased in both groups with no significant difference between the groups. Cool. But not relevant for trained individuals looking to, as the meta-analysis is titled, ‘maximize muscle hypertrophy’.

I’d also remove the criterion that excludes concurrent training studies from the analysis. If both groups performed the same concurrent training, this should not bias the results. A specific study that deserves inclusion due to its serious participants is Crewther et al. (2016). Here rugby players performed 3 workouts as either full-body workouts or in an upper/lower split, so the weekly training frequency was effectively 3x vs. 1.5x. The full-body group lost significantly more fat and in spite of that also gained a non-significantly greater amount of muscle (1.1% vs. 0.4% FFM).

The analysis

In addition to the change in inclusion criteria and definitions, I’d also like to see direct analyses of each frequency vs. the others, not just a pooled analysis of all frequencies and a high vs. low comparison. Training a muscle 1x vs. 2x may not have the same effect as 3x vs. 6x.

Moreover, by putting all training frequencies together, you confound the differences between frequencies with the differences between studies. As Greg Nuckols explained in his meta-analysis: “simply comparing the average hypertrophy seen with different frequencies doesn’t do anything to address the differences in hypertrophy observed between studies.” For example, if higher frequencies are mostly used in more trained populations, we would see lower average muscle growth with higher frequencies, because trained individuals generally grow more slowly than untrained individuals. By pooling results across studies instead of comparing frequencies within each study, the overall analysis could therefore show lower muscle growth with higher frequencies even though the individual studies show benefits of higher frequencies.
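A toy example shows how pooling study arms can reverse the within-study pattern, a Simpson’s-paradox-style effect. The studies and growth percentages below are entirely hypothetical, chosen so that the higher frequency wins inside every study while trained populations, which grow more slowly, supply most of the high-frequency arms.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical studies: (population, {weekly frequency: % muscle growth}).
# In every study, the higher-frequency arm grows more.
studies = [
    ("untrained", {1: 10.0, 2: 12.0}),
    ("trained", {2: 3.0, 3: 4.0}),
    ("trained", {3: 3.0, 6: 4.0}),
]

# Naive pooling: average growth per frequency across all study arms.
arms_by_freq = defaultdict(list)
for _, arms in studies:
    for freq, growth in arms.items():
        arms_by_freq[freq].append(growth)
pooled = {freq: mean(vals) for freq, vals in sorted(arms_by_freq.items())}
print("Pooled averages:", pooled)

# Within-study comparison: highest-frequency arm minus lowest-frequency arm.
within = [arms[max(arms)] - arms[min(arms)] for _, arms in studies]
print("Within-study differences:", within)
```

The pooled averages fall from 10% at 1x to 3.5% at 3x, suggesting frequency hurts, while every within-study difference is positive. Comparing frequencies within studies (or modeling the study as a factor) avoids this trap.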

Interpretation

The last point I’d like to touch on is mainly interesting for people interested in reading scientific papers, so if you just want the take-home message, it’s that I think this analysis needs to be redone to provide practically relevant information beyond what we already knew and to explain its discrepant results with Greg’s analysis.

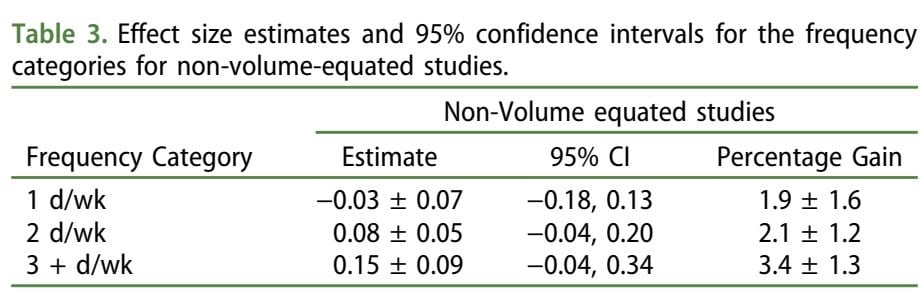

The analysis should show raw muscle growth rates or percentage gains, like Greg’s analysis. Effect sizes should be reported as accessory statistics, not as the primary measurements of practical relevance. Most analyses in the meta-analysis, including the overall volume-equated one and the one on trained individuals specifically, had positive effect sizes in favor of higher frequencies: around 0.07. For the lower body specifically, this bordered on statistical significance: p = 0.08. The authors discounted this as trivial. Even for the non-volume equated analysis, they reported: “the overall difference in the magnitude of effect between frequencies of 1 and 3+ times per week was modest (ES = 0.18), calling into question the practical benefit from the standpoint of increasing muscle mass”.

While common practice, it’s misguided to use effect sizes as measures of practical relevance for muscle growth. The Cohen benchmarks they use to interpret the size of an effect come from the social sciences. Effect sizes are used instead of raw scores because they allow us to compare different measurement instruments that aren’t easily interpretable. For example, what does a 5% difference in a Stroop test result mean in practice for how intelligent someone is? And how do you compare this to a 3-point difference on a survey with a Likert scale? You can’t, because they’re such different measures. Effect sizes express the change relative to the variance, which gives an abstract measure of the strength of an effect that we can compare across different measurement instruments and then interpret.
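Because an effect size divides the raw difference by the spread of the data, the same practical difference can produce very different effect sizes. A minimal sketch, assuming Cohen’s d with a pooled sample standard deviation (one common variant; the meta-analysis may have used a different formulation) and invented growth percentages in which the higher-frequency group grows 50% more in both scenarios:

```python
from statistics import mean, stdev

def cohens_d(high: list[float], low: list[float]) -> float:
    """Standardized mean difference using the pooled sample standard deviation."""
    n_h, n_l = len(high), len(low)
    pooled_var = ((n_h - 1) * stdev(high) ** 2 + (n_l - 1) * stdev(low) ** 2) / (n_h + n_l - 2)
    return (mean(high) - mean(low)) / pooled_var ** 0.5

def relative_gain(high: list[float], low: list[float]) -> float:
    """How much more the higher-frequency group grew on average, as a fraction."""
    return mean(high) / mean(low) - 1

# Hypothetical % muscle growth per participant; same group means, different spread.
scenarios = {
    "low spread": ([2.0, 3.0, 4.0], [1.0, 2.0, 3.0]),
    "high spread": ([-3.0, 3.0, 9.0], [-4.0, 2.0, 8.0]),
}
for name, (high, low) in scenarios.items():
    print(f"{name}: d = {cohens_d(high, low):.2f}, "
          f"growth advantage = {relative_gain(high, low):.0%}")
```

Both scenarios show 50% more average growth with the higher frequency, yet d is 1.00 in one and about 0.17 in the other, purely because of the variance between participants.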

For muscle growth, we don’t need such a measure, because muscle growth is precisely what we’re interested in. Effect sizes here achieve the opposite of what we want: they make an analysis uninterpretable. If I tell you that you can achieve a 0.18 greater effect size of muscle growth by going to the gym 3x per week instead of once, as the meta-analysis reports, what does that tell you? Not much, even if you’re a scientist. But now if I show you the raw results below, how does this change your interpretation?

50% more muscle growth, yes please! The practical effect is huge. In my experience, an advanced natural (!) trainee going to the gym once a week can expect basically nada in terms of results. Twice a week, maybe if you train like a beast. Three times a week, now we’re talking good progress. So the difference between one and three sessions is basically zero results vs. good results. In Greg’s analysis, even under set-equated conditions there was a roughly linear ~20% increase in muscle growth for each additional time we train a muscle per week. That’s certainly practically relevant for many trainees.
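To see what a roughly linear ~20% increase per additional weekly session implies, here is a small sketch. It assumes the increase is additive relative to training a muscle once per week (a compounding interpretation would give slightly larger numbers); the function is hypothetical and is not Greg’s fitted model.

```python
def relative_growth(sessions_per_week: int, gain_per_extra_session: float = 0.20) -> float:
    """Growth relative to 1 session per week, assuming a purely additive
    ~20% increase per additional weekly session (an assumption, not a fitted model)."""
    if sessions_per_week < 1:
        raise ValueError("sessions_per_week must be at least 1")
    return 1 + gain_per_extra_session * (sessions_per_week - 1)

for f in range(1, 5):
    print(f"{f}x/week: {relative_growth(f):.1f}x the growth of 1x/week")
```

Under this assumption, 3 sessions per week yields about 1.4x the growth of a single session, which is in the same ballpark as the raw difference discussed above.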

Here’s another nice example from Dankel et al. (2016) to illustrate how meaningless effect size differences can be. It shows how a considerable effect size difference of nearly 0.4 in pre-post strength scores between 2 groups can occur when there is actually zero difference in average 1RM strength development between the groups. Pre-post effect sizes are greatly affected by the variance in the data, so comparing them does not always reflect the actual difference between the groups.
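A minimal sketch of the same mechanism, using invented 1RM numbers (not Dankel et al.’s data) and the common mean-change-over-pre-test-SD formulation of a pre-post effect size, which is only one of several variants in use:

```python
from statistics import mean, stdev

def pre_post_es(pre: list[float], post: list[float]) -> float:
    """Within-group effect size: mean change divided by the pre-test standard deviation."""
    return (mean(post) - mean(pre)) / stdev(pre)

# Hypothetical 1RM data (kg): both groups improve by exactly 10 kg on average,
# but group B starts out far more varied.
group_a_pre, group_a_post = [80.0, 100.0, 120.0], [90.0, 110.0, 130.0]
group_b_pre, group_b_post = [60.0, 100.0, 140.0], [70.0, 110.0, 150.0]

es_a = pre_post_es(group_a_pre, group_a_post)
es_b = pre_post_es(group_b_pre, group_b_post)
print(f"Mean gains: {mean(group_a_post) - mean(group_a_pre):.0f} kg "
      f"vs {mean(group_b_post) - mean(group_b_pre):.0f} kg")
print(f"Effect sizes: {es_a:.2f} vs {es_b:.2f} (difference {es_a - es_b:.2f})")
```

Identical 10 kg average gains produce effect sizes of 0.50 and 0.25, a 0.25 difference created entirely by the groups’ different starting variance.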

Not only are effect sizes not suitable to interpret magnitudes of muscle growth, the Cohen interpretation standards from social sciences are not valid for exercise science in the first place. They are based on measures like personality and IQ, almost invariably in college students and generally for acute measures with across-group results. You cannot extrapolate those standards of what’s a ‘large effect’ to a longitudinal, within-group measure from an entirely different field of study, such as muscle growth.

I can’t fault the authors for relying on effect sizes to interpret magnitudes, as it’s common practice in exercise science. However, it shouldn’t be, and exercise scientists should A) more carefully consider when effect sizes should be reported and which kinds to report and B) establish new criteria suitable for exercise science as to what constitutes small, medium and large effects.

Conclusion

For the results of this meta-analysis to answer the practical question of how often we should train, A) it should be redone with a comparison of set- but not work- or repetition-equated studies, B) the inclusion and exclusion criteria should be refined, C) the analysis should include comparisons of each frequency vs. the others and D) the percentage differences in muscle growth rates should be reported to interpret the practical relevance of the differences found.

As it stands, the literature is consistent with there being a small, probably contextual, positive effect of higher training frequencies even when total repetition volume is equated and a potentially much more meaningful increase in muscle growth when total work is not equated, as higher frequencies should result in a 5-25% greater work output based on the current literature. The proposed analysis should help clarify if the difference is indeed a highly relevant ~20% additional muscle growth per additional time we train a muscle per week, as per Greg’s analysis, or whether the difference is trivial, as the new meta-analysis authors suggest. The next question is when higher frequencies can be beneficial, as there are too many positive findings of higher frequencies to discount all of them as flukes.

New meta-analysis reference

How many times per week should a muscle be trained to maximize muscle hypertrophy? A systematic review and meta-analysis of studies examining the effects of resistance training frequency. Schoenfeld BJ, Grgic J, Krieger J. J Sports Sci. 2018 Dec 17:1-10. doi: 10.1080/02640414.2018.1555906.
